In recent years, the way machines process and understand human language has been revolutionized. At the heart of this transformation lies a remarkable innovation: the large language model (LLM). These models are advanced AI systems designed to comprehend, generate, and interact with human language at an unprecedented level of sophistication. Although their capabilities rest on sophisticated algorithms and large volumes of data, they directly affect daily life through improved search engines, virtual assistants such as Siri and Alexa, and many other applications.

So, what exactly is unique about LLMs, and why have they become a cornerstone of artificial intelligence? This article takes a closer look at what large language models are, what they are used for, how they work, and some of their practical applications.

What Is a Large Language Model?

A large language model, often abbreviated to LLM, is a type of artificial intelligence developed specifically to understand and generate human language. Such models rely on neural networks, specifically an architecture known as the transformer, to take in huge amounts of text. By analyzing patterns, context, and the relationships between words, LLMs can predict what comes next in a sentence, answer a question, or even draft an essay.

Unlike traditional language models, which depend on predefined rules or smaller datasets, LLMs are trained on massive corpora encompassing books, websites, and other textual content. This allows them to identify nuanced meanings, idiomatic expressions, and cultural references that give their responses a more natural, human touch.

For example, when you enter a query into your favorite search engine, an LLM could interpret your question and help return accurate, contextually relevant results. In machine translation, LLMs power tools that make cross-language communication much smoother.

How Do Large Language Models Work?

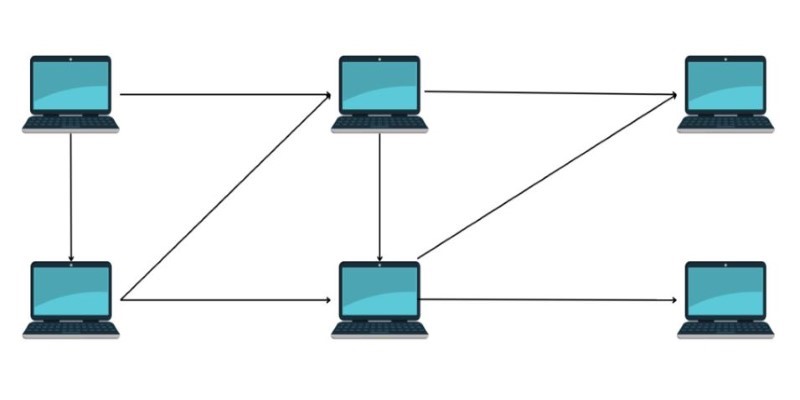

To understand how large language models (LLMs) work, it's essential to first grasp the basics of machine learning and neural networks. LLMs are built on transformer-based architectures, such as GPT (Generative Pre-trained Transformer), which rely on many layers of interconnected nodes loosely inspired by how the human brain processes information.

Before these layers can handle vast amounts of text, the text is broken down into smaller components called tokens, a process known as tokenization. Tokens can represent whole words, parts of words, or even individual characters. The model then examines the relationships between tokens to predict the next token in a sequence and, by repeating that step, generate entire paragraphs of coherent text.
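The tokenization and next-word prediction steps described above can be illustrated with a deliberately tiny sketch. It is not how a real LLM is implemented: the word-level `tokenize` function, the bigram counting in `train_bigram_counts`, and the sample `corpus` are all assumptions made for illustration. Real LLMs typically use subword tokenizers and a transformer network rather than simple counts, but the basic idea of "predict the next token from what came before" is the same.

```python
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Split text into lowercase word and punctuation tokens (a simplification)."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def train_bigram_counts(tokens):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, standing in for the massive datasets real models use.
corpus = "the cat sat on the mat. the cat ate the fish."
tokens = tokenize(corpus)
counts = train_bigram_counts(tokens)

print(tokens[:6])                    # ['the', 'cat', 'sat', 'on', 'the', 'mat']
print(predict_next(counts, "the"))   # cat
```

In this toy corpus, "the" is followed by "cat" twice and by "mat" and "fish" once each, so "cat" is the prediction. An LLM makes the same kind of choice, but it conditions on the whole preceding context rather than on a single word.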

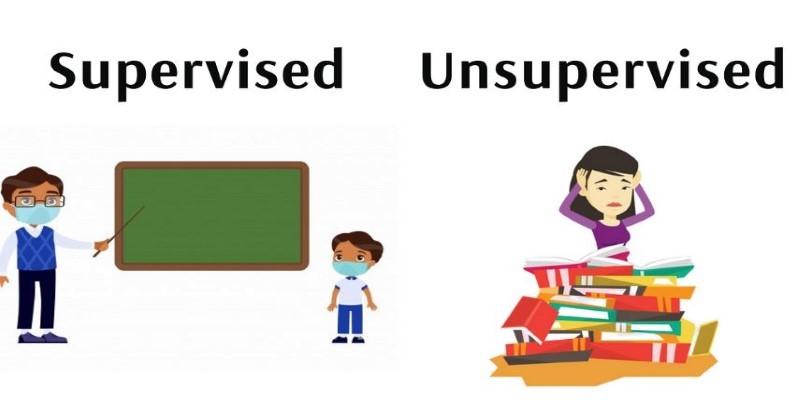

The development of LLMs involves two key phases: pre-training and fine-tuning. During pre-training, the model is exposed to an enormous dataset, ranging from books to websites, so it can learn linguistic patterns, context, and grammar. Fine-tuning comes later, when the model is adjusted for specific applications, such as answering questions, summarizing information, or creating content.
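As a rough analogy for the two phases, the sketch below "pre-trains" a toy next-word model on general text and then "fine-tunes" it on a small domain-specific sample. Everything here is an assumption for illustration: the `update_counts` helper, the `weight` parameter, and both corpora are hypothetical. Real fine-tuning adjusts the weights of a neural network through further training rather than adding to a table of counts.

```python
from collections import Counter, defaultdict


def update_counts(counts, text, weight=1):
    """Add next-word counts from `text`; `weight` controls how strongly it counts."""
    tokens = text.lower().split()
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += weight
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token)
    return followers.most_common(1)[0][0] if followers else None


# Hypothetical corpora: broad general text vs. a narrow banking domain.
general_corpus = "the bank of the river was muddy . the bank of the river flooded ."
domain_corpus = "the bank approved the loan . the bank approved the mortgage ."

model = defaultdict(Counter)

# "Pre-training": learn general patterns from broad text.
update_counts(model, general_corpus)
before = predict_next(model, "bank")

# "Fine-tuning": continue training on domain text, weighted more heavily.
update_counts(model, domain_corpus, weight=3)
after = predict_next(model, "bank")

print(before)  # of
print(after)   # approved
```

The same model gives a different continuation for "bank" once it has been adapted to the domain data, which is the intuition behind fine-tuning a general-purpose model for a specific application.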

The true strength of LLMs lies in their ability to generalize knowledge rather than merely memorize facts. They can infer meaning and context across diverse subjects, making them highly versatile tools used in industries like healthcare, education, and content creation. Their adaptability continues to drive innovation in human-machine interaction.

Applications and Impact of Large Language Models

The applications of large language models are diverse. They are revolutionizing industries and enhancing human-machine interactions. In healthcare, for example, LLMs assist in analyzing medical records, suggesting diagnoses, or even generating patient-friendly explanations for complex medical conditions. Their ability to process vast amounts of information quickly makes them indispensable in research and data analysis.

In education, LLMs provide personalized tutoring by answering student questions, generating study materials, or simplifying complex topics. They help bridge knowledge gaps and foster independent learning, particularly in regions with limited access to quality education.

Moreover, businesses are harnessing LLMs' capabilities for customer service automation, content creation, and market analysis. By generating human-like responses, these models streamline customer interactions, saving time and resources while enhancing user experience.

However, these advancements come with ethical concerns. Critics argue that the widespread use of LLMs could lead to job displacement, misinformation, or even biased outputs if the training data contains inherent prejudices. Ensuring transparency and accountability in how these models are developed and deployed is critical to mitigating such risks.

Challenges in Developing Large Language Models

While large language models offer impressive capabilities, their development is no small feat. One of the primary challenges is the sheer volume of data and computational resources required to train these models. Training an LLM involves processing terabytes of data, which demands advanced hardware and consumes significant energy. This resource-intensive process raises concerns about the environmental impact of AI development.

Another challenge lies in ensuring the quality and diversity of the training data. Since LLMs learn from the data they are exposed to, biases present in the training datasets can result in biased outputs. For instance, if the data predominantly represents one cultural perspective, the model might produce responses that unintentionally exclude or misrepresent other viewpoints. Developers must continuously refine and curate datasets to reduce such biases.

Lastly, the issue of interpretability remains a significant hurdle. While LLMs can generate highly accurate and human-like outputs, understanding how they arrive at specific conclusions is often difficult. This "black box" nature of AI models makes it challenging to ensure reliability and accountability, especially in critical applications like healthcare and law.

Despite these obstacles, researchers and developers continue to innovate, working towards more efficient, fair, and transparent language models that serve a broader range of societal needs.

Conclusion

Large language models (LLMs) represent a significant leap in artificial intelligence, transforming how machines understand and generate human language. Their ability to analyze vast amounts of data and produce human-like responses has revolutionized fields such as healthcare, education, and customer service. Despite their remarkable potential, challenges like bias, high resource consumption, and ethical concerns persist. As research advances, addressing these issues is essential to ensure the responsible use of LLMs. With continued innovation and transparency, LLMs can keep reshaping technology and communication, creating new possibilities for human-machine interaction and enriching various aspects of everyday life.